Rethinking Scale: Theseus for Workloads Big and Small

By Rodrigo Aramburu

Published: July 10, 2025

9 min read

Positioning Theseus exclusively for 10TB+ workloads restricted our scope and shrank our addressable market. This was intentional. Theseus was built to make use of the latest and greatest software and hardware coming out of NVIDIA (GPUDirect Storage, NVLink, InfiniBand, etc.), and that just doesn’t exist on the cloud.

Initially, our go-to-market strategy targeted the most demanding use cases and those that justified the cost of enterprise-class GPUs, leveraging state-of-the-art DGX/HGX architectures. But as GPUs proliferated across public clouds and prices dropped, customers kept asking for a cloud-native Theseus.

Until recently, we simply couldn’t hit the cost/performance thresholds needed to justify cloud deployments.

In this latest release, we’ve unlocked dramatic cloud performance gains, so we figured we should show our latest cloud performance at scales from 1GB to 30TB. The feedback from our benchmarking blog, which compares DuckDB to Theseus, was clear: “Theseus doesn’t perform well at small scale.” After the work of the last few sprints, and by harnessing affordable NVIDIA T4 and L4 GPU instances on AWS, Theseus now performs incredibly well at both small and large scales. Because Theseus is a distributed runtime, you can simply add more GPU resources to dial in the optimal balance of cost and performance.

Full view of the benchmark results

| Engine Config | AWS EC2 Instance | Nodes | Cluster Cost per hour ($) | SF1 Runtime (s) | SF1 Cost ($) | SF10 Runtime (s) | SF10 Cost ($) | SF100 Runtime (s) | SF100 Cost ($) | SF1000 Runtime (s) | SF1000 Cost ($) | SF10000 Runtime (s) | SF10000 Cost ($) | SF30000 Runtime (s) | SF30000 Cost ($) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DuckDB | r5d.xlarge | 1 | $0.29 | 43.791 | $0.0035 | 146.683 | $0.012 | 1412.660 | $0.11 | X | X | X | X | X | X |
| DuckDB | r5d.8xlarge | 1 | $2.02 | 38.609 | $0.03 | 60.873 | $0.039 | 329.682 | $0.21 | 2,439.992 | $1.56 | X | X | X | X |
| DuckDB | r5d.24xlarge | 1 | $6.91 | 42.536 | $0.08 | 56.858 | $0.109 | 188.872 | $0.36 | 1,435.711 | $2.76 | 21,971.26 | $42.19 | X | X |
| Theseus [T4 GPU] | g4dn.xlarge | 1 | $0.53 | 24.006 | $0.0035 | 67.521 | $0.010 | 758.151 | $0.111 | X | X | X | X | X | X |
| Theseus [T4 GPUs] | g4dn.xlarge | 2 | $1.05 | 30.973 | $0.0091 | 66.059 | $0.019 | 411.684 | $0.120 | X | X | X | X | X | X |
| Theseus [T4 GPUs] | g4dn.xlarge | 4 | $2.10 | 32.004 | $0.0187 | 56.279 | $0.033 | 227.448 | $0.133 | 945.361 | $0.553 | X | X | X | X |
| Theseus [L4 GPU] | g6.4xlarge | 1 | $1.32 | 22.295 | $0.008 | 64.188 | $0.024 | 400.375 | $0.147 | X | X | X | X | X | X |
| Theseus [L4 GPUs] | g6.4xlarge | 2 | $2.6464 | 28.422 | $0.021 | 64.696 | $0.048 | 334.022 | $0.246 | X | X | X | X | X | X |
| Theseus [L4 GPUs] | g6.4xlarge | 4 | $5.2928 | 30.090 | $0.044 | 50.052 | $0.074 | 187.512 | $0.276 | 258.548 | $0.380 | X | X | X | X |
| Theseus [L4 GPUs] | g6.4xlarge | 8 | $10.5856 | 30.105 | $0.089 | 50.221 | $0.148 | 125.047 | $0.368 | 166.457 | $0.489 | X | X | X | X |
| Theseus [L4 GPUs] | g6.4xlarge | 16 | $21.1712 | 36.525 | $0.215 | 42.762 | $0.251 | 93.334 | $0.549 | 135.356 | $0.796 | 750.14 | $4.411 | X | X |
| Theseus [L4 GPUs] | g6.4xlarge | 32 | $42.3424 | 49.606 | $0.583 | 52.987 | $0.623 | 87.540 | $1.030 | 116.476 | $1.370 | 450.03 | $5.293 | 1,442.482 | $16.966 |

Benchmarking Setup and Methodology

These are TPC-H benchmark results comparing the total runtime and cost for DuckDB and Theseus to complete the full query set at various scale factors, reading data directly from AWS S3.

Data

- All queries for both engines are run off Parquet files stored in AWS S3. That data is never pulled or pre-loaded onto local drives.

- That data is not pre-sorted or prepped in any way. The order it comes out of the TPC-H dbgen is the order it is converted into Parquet files.

Compute

- DuckDB - We are using different sizes of r5d EC2 instances, from r5d.xlarge to r5d.24xlarge.

- Theseus - We are using different node counts of g4dn and g6 EC2 instances, ranging from 1 node up to 32 nodes.
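The cost figures reported for each run appear to follow from the hourly cluster price and the measured runtime. Here is a minimal sketch of that arithmetic, assuming pure per-second billing with no minimum charge or startup overhead. That assumption is ours: it matches the published numbers to within rounding of the hourly prices, but the post does not state the formula. The function name `run_cost` is also introduced here for illustration.

```python
def run_cost(cluster_cost_per_hour: float, runtime_seconds: float) -> float:
    """Cost of one benchmark run, assuming pure per-second billing (our assumption)."""
    return cluster_cost_per_hour * runtime_seconds / 3600.0

# Hourly prices and runtimes taken from the full results table.
examples = {
    "Theseus, 4 x g6.4xlarge, SF1000": (5.2928, 258.548),
    "Theseus, 16 x g6.4xlarge, SF10000": (21.1712, 750.14),
    "DuckDB, 1 x r5d.24xlarge, SF10000": (6.91, 21971.26),
}

for label, (price_per_hour, runtime_s) in examples.items():
    print(f"{label}: ${run_cost(price_per_hour, runtime_s):.3f}")
```

Small differences from the published costs are expected where the hourly price in the table has been rounded to two decimals.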

Queries

- We run the standard TPC-H queries with no rewrites, exactly the same on both engines.

If you read our benchmarking report, you’ll know we’re not fans of using a simple column chart to compare two systems (see our methodologies). It typically supports cherry-picking of results that benefit the publishing vendor. Unfortunately, it’s not always clean and easy to compare data systems fairly using the SPACE chart. For simplicity and brevity, we will use bar charts to compare Theseus with DuckDB and provide ALL OF THE DATA (even what we didn’t plot) used to build the charts.

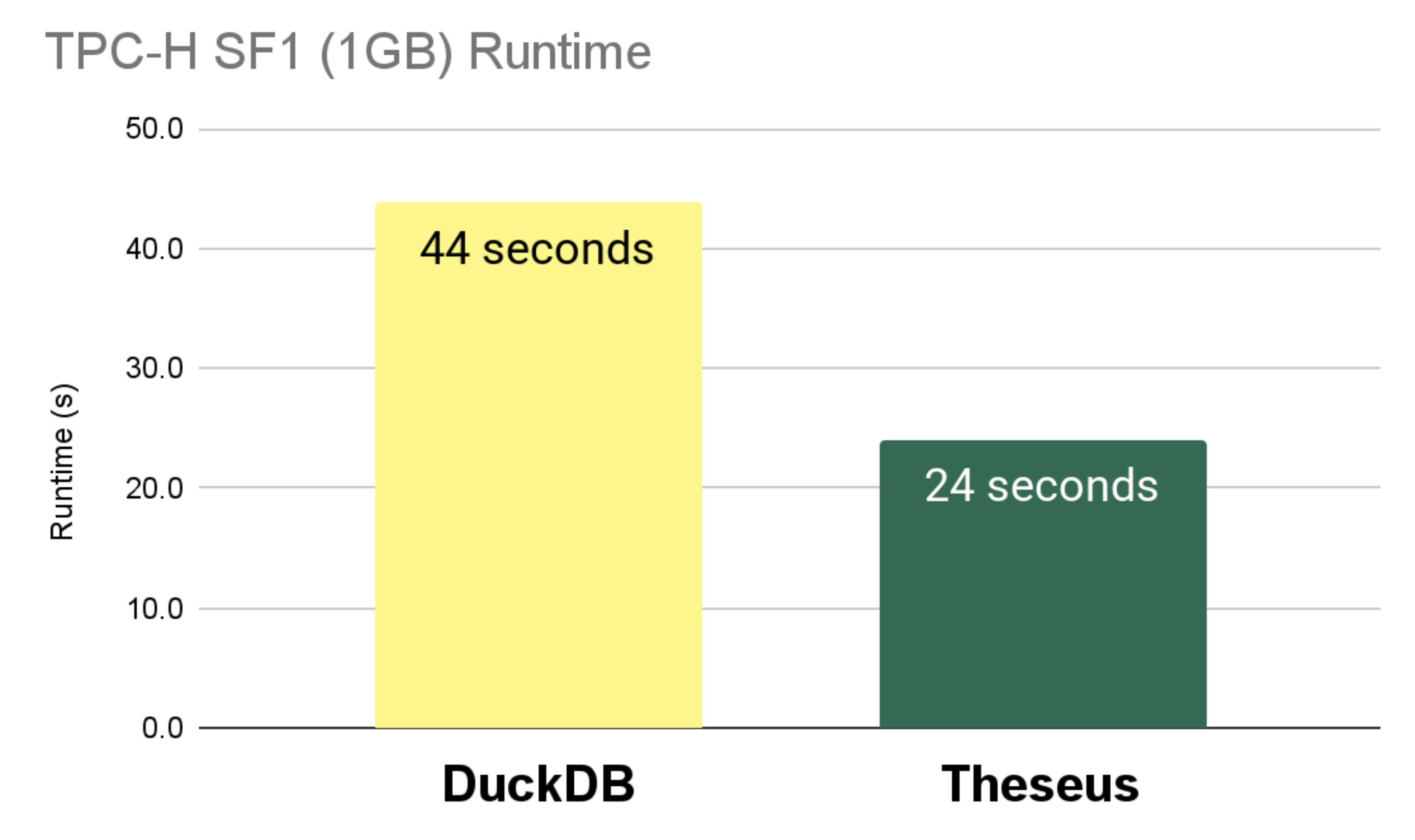

Results: TPC-H SF1 (1GB)

We’re starting at a small scale factor because this is where we have rarely shown data. You can see below that DuckDB and Theseus effectively cost the same. We would recommend using DuckDB due to the simplicity of spinning it up and getting going.

AWS EC2 Instance

- DuckDB - 1 x r5d.xlarge ($0.29 per hour)

- Theseus - 1 x g4dn.xlarge ($0.53 per hour)

| Engine | Runtime (s) | Cost ($) | Runtime Factor (vs. Theseus) |
|---|---|---|---|
| Theseus | 24.006 | $0.0035 | 1.000 |
| DuckDB | 43.791 | $0.0035 | 1.824 |
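The factor column in the per-scale-factor tables is consistent with each engine's runtime divided by the Theseus runtime at the same scale factor. Here is a short sketch of that calculation, using the runtimes published in this post; `runtime_factor` is a name introduced here, not something from the Theseus or DuckDB tooling.

```python
def runtime_factor(runtime_s: float, baseline_s: float) -> float:
    """How many times longer a run took than the baseline run."""
    return runtime_s / baseline_s

# (DuckDB runtime, Theseus runtime) in seconds for the configurations
# compared at each scale factor in this post.
runs = {
    "SF1": (43.791, 24.006),
    "SF10": (146.683, 67.521),
    "SF100": (329.682, 227.448),
    "SF1000": (2439.992, 258.548),
    "SF10000": (21971.26, 750.14),
}

factors = {sf: round(runtime_factor(duckdb_s, theseus_s), 3)
           for sf, (duckdb_s, theseus_s) in runs.items()}

for sf, factor in factors.items():
    print(f"{sf}: DuckDB took {factor:.3f}x as long as Theseus")
```

Note that the factor compares runtimes only. The instance types differ between engines, so cost has to be read from its own column.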

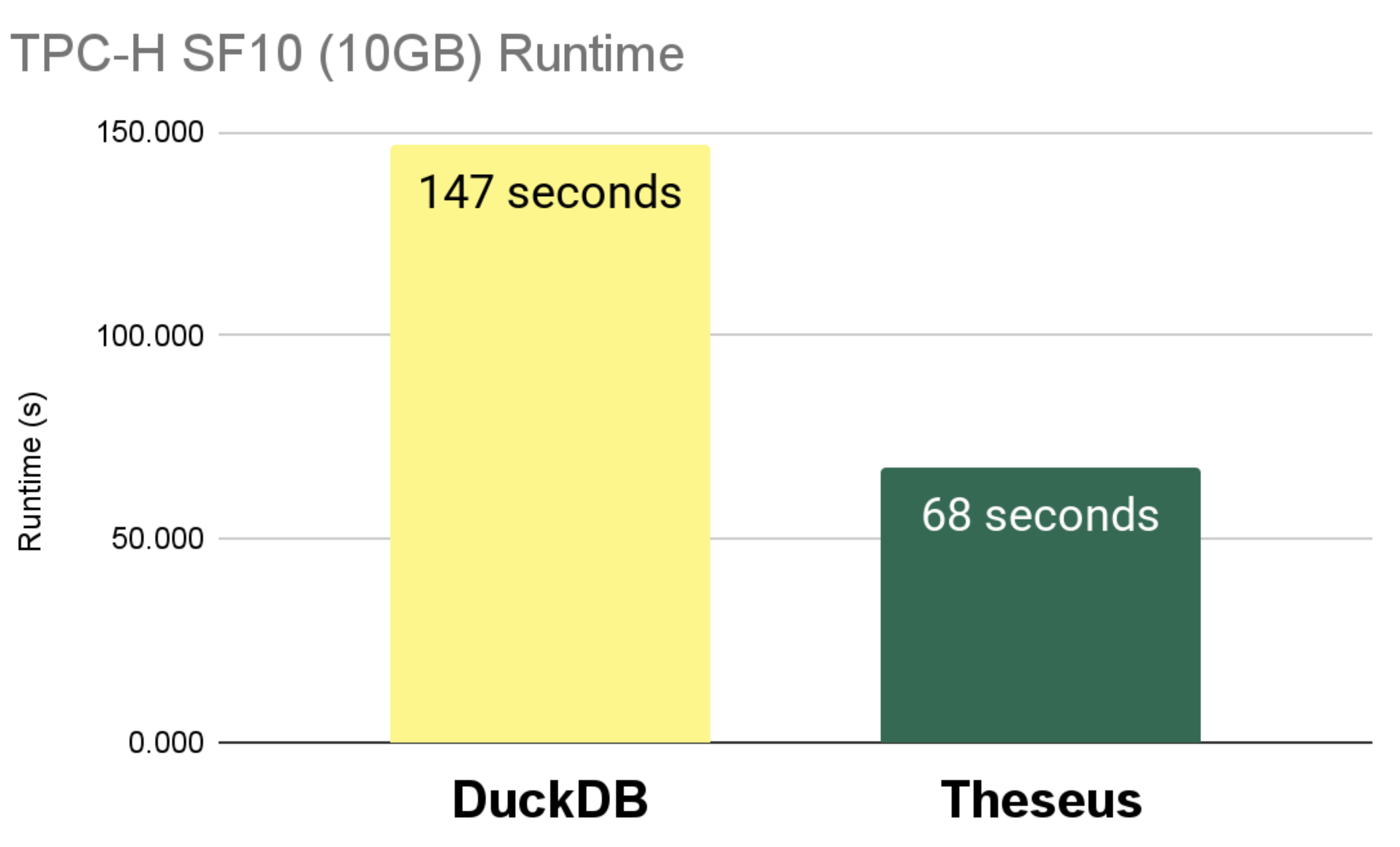

Results: TPC-H SF10 (10GB)

At 10 gigabytes, the gap starts to widen. Theseus finishes in less than half the time, so it might make sense on performance alone, while its TCO is only marginally better ($0.010 vs. $0.012).

AWS EC2 Instance

- DuckDB - 1 x r5d.xlarge ($0.29 per hour)

- Theseus - 1 x g4dn.xlarge ($0.53 per hour)

| Engine | Runtime (s) | Cost ($) | Runtime Factor (vs. Theseus) |
|---|---|---|---|
| Theseus | 67.521 | $0.010 | 1.000 |
| DuckDB | 146.683 | $0.012 | 2.172 |

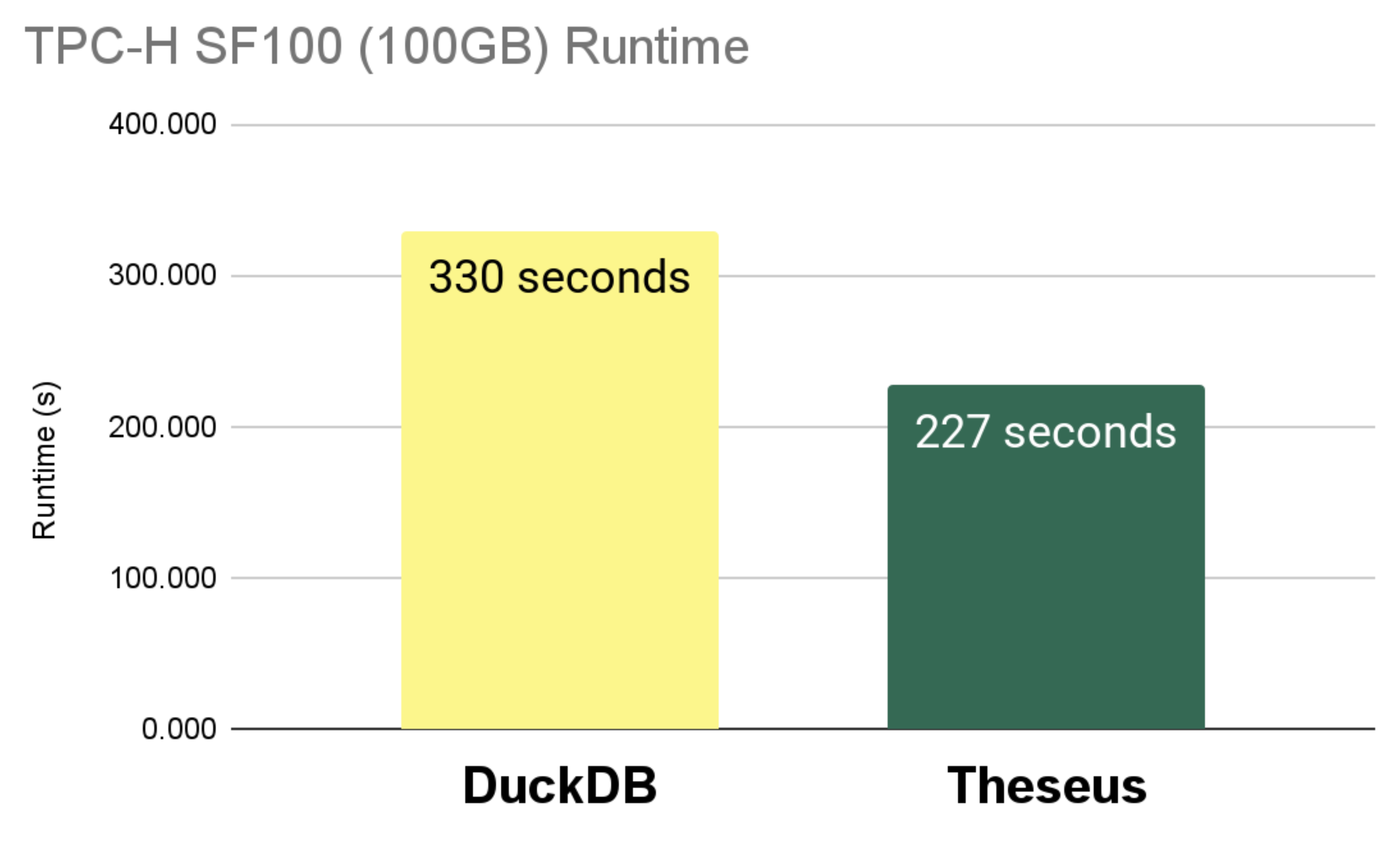

Results: TPC-H SF100 (100GB)

As we continue to scale, it makes sense to move both DuckDB and Theseus to larger configurations. As the raw data shows, these are not the cheapest runs at this scale factor, but the performance gain makes them the most “reasonable” choice.

AWS EC2 Instance

- DuckDB - 1 x r5d.8xlarge ($2.02 per hour)

- Theseus - 4 x g4dn.xlarge ($2.10 per hour)

| Engine | Runtime (s) | Cost ($) | Runtime Factor (vs. Theseus) |
|---|---|---|---|
| Theseus | 227.448 | $0.133 | 1.000 |
| DuckDB | 329.682 | $0.21 | 1.449 |

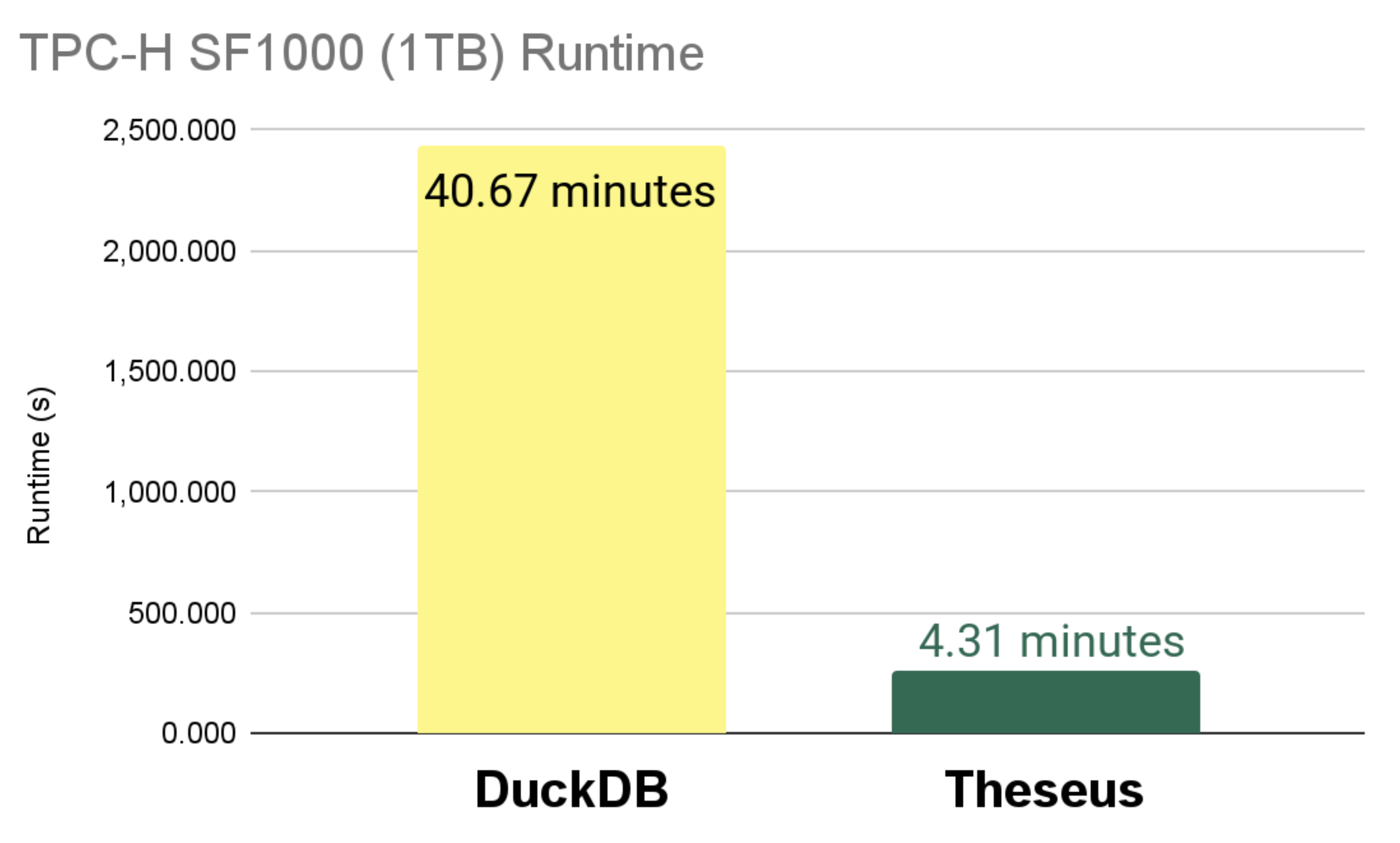

Results: TPC-H SF1000 (1TB)

As we often say, the more we scale, the more Theseus makes sense: the differences in performance and cost begin to greatly exceed what is possible today with CPU-based engines.

AWS EC2 Instance

- DuckDB - 1 x r5d.8xlarge ($2.02 per hour)

- Theseus - 4 x g6.4xlarge ($5.29 per hour)

| Engine | Runtime (s) | Cost ($) | Runtime Factor (vs. Theseus) |
|---|---|---|---|
| Theseus | 258.548 | $0.380 | 1.000 |
| DuckDB | 2,439.992 | $1.56 | 9.437 |
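Because Theseus scales out by adding nodes, choosing a configuration becomes a small optimization problem: find the cheapest run that still meets a runtime target. Here is a sketch using the SF1000 rows from the full results table. The `cheapest_within` helper and the runtime budgets are hypothetical, introduced only to illustrate the trade-off.

```python
from typing import NamedTuple, Optional

class Run(NamedTuple):
    config: str
    runtime_s: float
    cost_usd: float

# SF1000 rows from the full results table (only rows with numeric results).
SF1000_RUNS = [
    Run("DuckDB 1 x r5d.8xlarge", 2439.992, 1.56),
    Run("DuckDB 1 x r5d.24xlarge", 1435.711, 2.76),
    Run("Theseus 4 x g4dn.xlarge", 945.361, 0.553),
    Run("Theseus 4 x g6.4xlarge", 258.548, 0.380),
    Run("Theseus 8 x g6.4xlarge", 166.457, 0.489),
    Run("Theseus 16 x g6.4xlarge", 135.356, 0.796),
    Run("Theseus 32 x g6.4xlarge", 116.476, 1.370),
]

def cheapest_within(runs: list[Run], max_runtime_s: float) -> Optional[Run]:
    """Return the lowest-cost run that finishes within the budget, or None."""
    eligible = [r for r in runs if r.runtime_s <= max_runtime_s]
    return min(eligible, key=lambda r: r.cost_usd) if eligible else None

# Hypothetical runtime budgets in seconds.
for budget_s in (300, 200, 120):
    best = cheapest_within(SF1000_RUNS, budget_s)
    print(f"budget {budget_s}s ->", best.config if best else "no configuration fits")
```

With these numbers, tightening the budget moves the choice to larger L4 clusters, each step buying less runtime per extra dollar.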

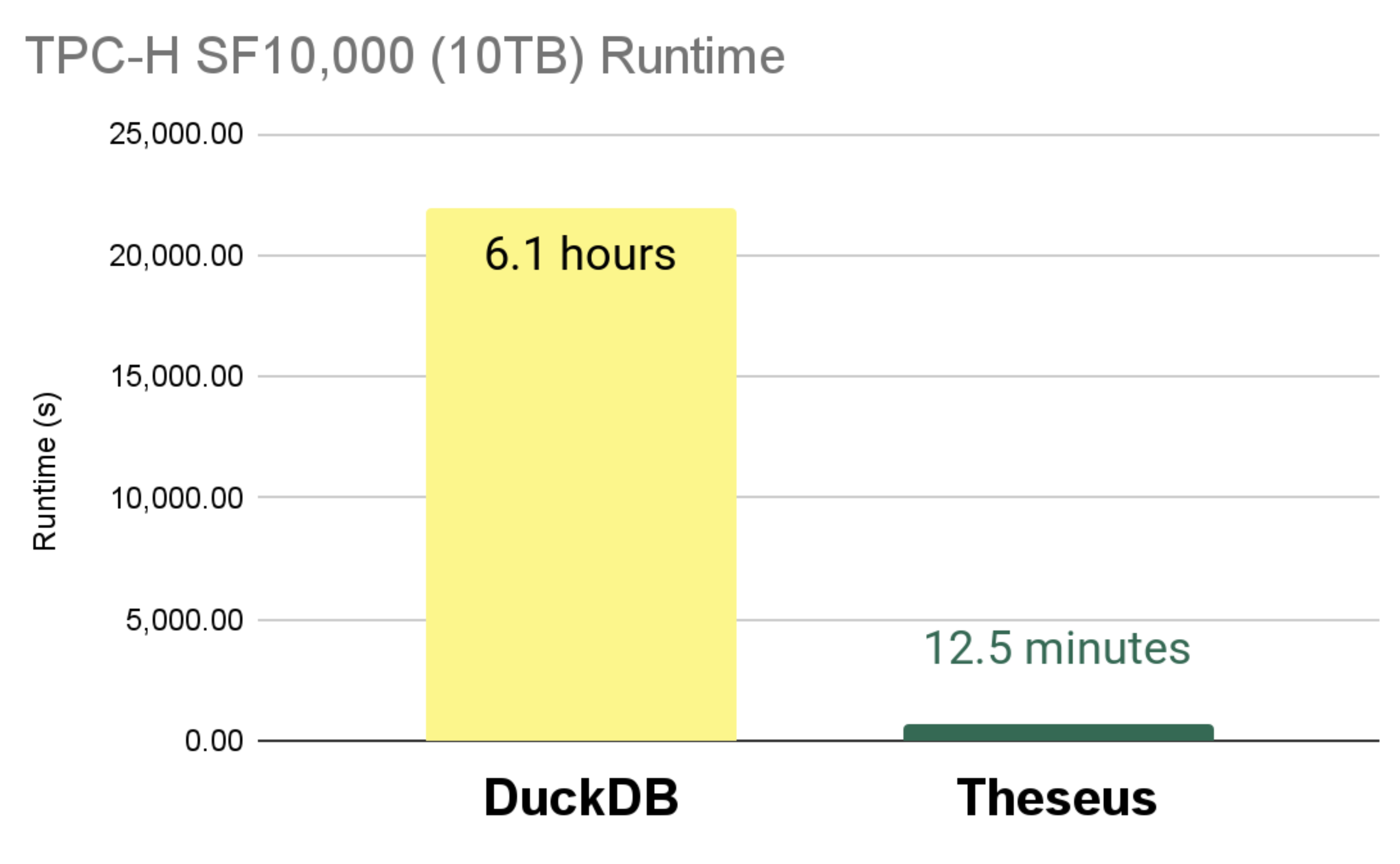

Results: TPC-H SF10,000 (10TB)

As expected, the performance differential on very large data sets becomes very large, to the point where the gap in TCO and performance calls for a rethink of how these systems should be built. In this case, we could only scale DuckDB vertically by moving to a larger server, which has limits, while with Theseus we can scale our distributed runtime out to 16 x L4 GPUs and optimize performance and cost.

AWS EC2 Instance

- DuckDB - 1 x r5d.24xlarge ($6.91 per hour)

- Theseus - 16 x g6.4xlarge ($21.17 per hour)

| Engine | Runtime (s) | Cost ($) | Runtime Factor (vs. Theseus) |
|---|---|---|---|
| Theseus | 750.14 | $4.411 | 1.000 |
| DuckDB | 21,971.26 | $42.19 | 29.290 |

Wrap-up

Contrary to our messaging for the past couple of years, Theseus performance scales seamlessly to fit your workload, from 1 GB to 30 TB, on cost-effective T4 and L4 AWS EC2 instances. We intend to post a lot more numbers soon, but figured we would share these as soon as we had them ourselves.
