Feb 16, 2023

New Contribution to GitHub Actions Increases DevOps Flexibility, Performance

Ian Flores Siaca, Álvaro Maldonado Mateos

At Voltron Data we actively contribute to Apache Arrow, a fast-growing project with many active contributors. Arrow sees about four releases per year, and in the lead-up to a release the number of Continuous Integration (CI) jobs increases to around 2,000 per day. GitHub donates 180 concurrent GitHub-hosted runners to the Apache Software Foundation (ASF), which Arrow is part of. Because these runners are shared across Apache projects, we often face long queue times, at times surpassing five hours.

Voltron Data strives for stability, efficiency, and scalability. From open source communities to large-scale enterprises, we design systems to make working with technology easier, without limitations when it comes to infrastructure. A robust Continuous Integration/Continuous Deployment (CI/CD) DevOps system is vital to our success. Our team continues to drive new capabilities for our internal use as well as for the needs of the larger community.

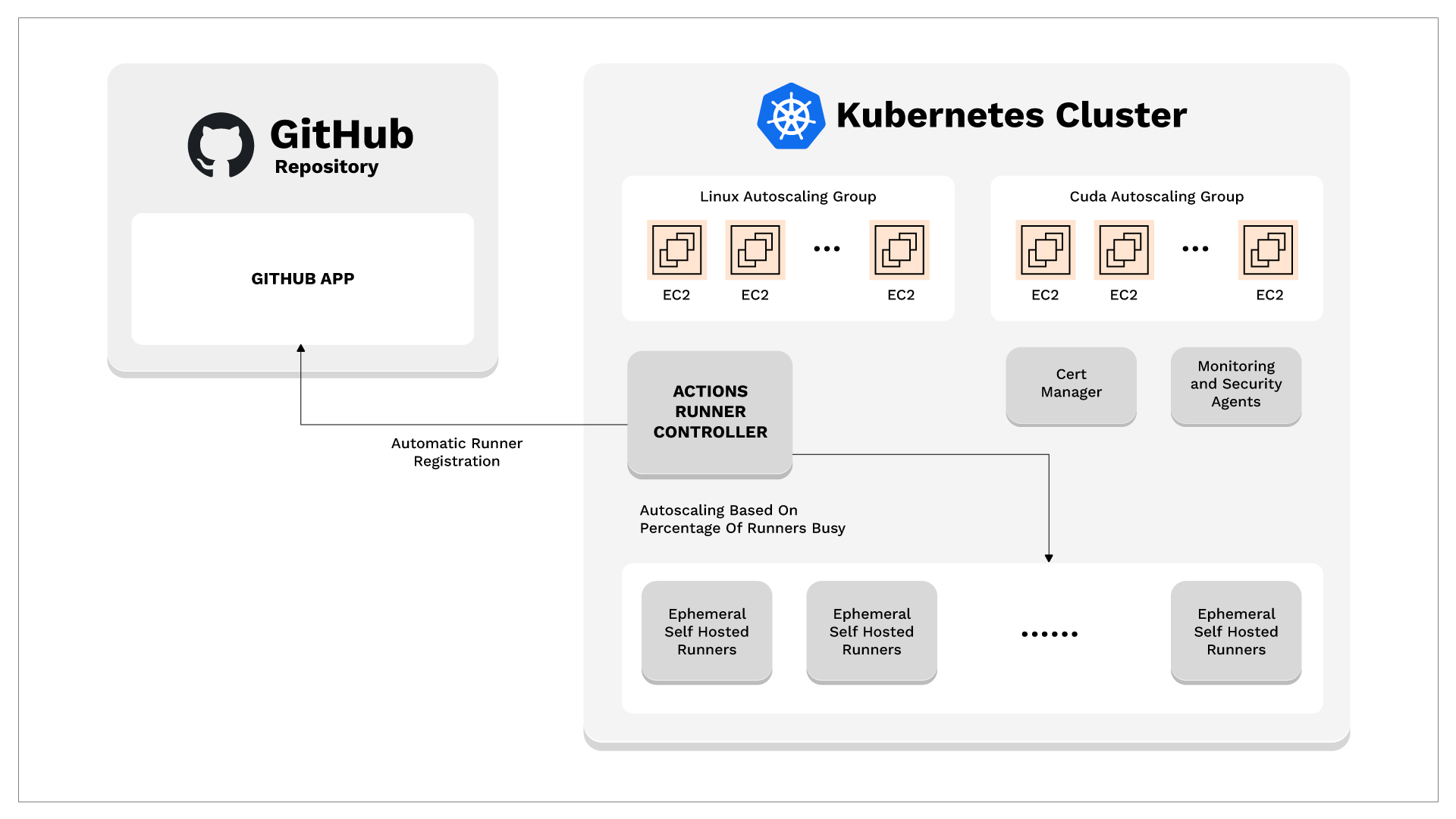

This is why we’re excited to share a new solution for GitHub Actions Runner Controller. In a nutshell, it’s a wrapper that lets you add runners to open source projects (like Apache Arrow - but more on that later) so you can work faster on development. Importantly, it lets you embrace hardware and platforms that GitHub-hosted runners don’t support, like GPUs and ARM architectures.

You can access the package here. To learn about our approach and understand how to implement and maximize the solution, continue reading.

Our Approach

We decided to explore the possibility of self-hosted runners to increase efficiency. We considered two solutions:

- The first option uses AWS Lambdas to launch a container to execute jobs.

- The second option is based on the Actions Runner Controller running in Kubernetes, which can operate both Linux and Windows nodes.

The second option offered more advantages to us. Below is a breakdown of the features this solution offers:

Auto-scaling

The solution can scale down to zero, which allows us to deploy high-cost instances like NVIDIA GPU runners and then remove the runners when they are not in use. It can also scale up to support pipelines that see an increased number of jobs being submitted.

Runner image customization

Software requirements can be pre-installed to save time: the OCI image can be customized with known dependencies to reduce job execution time.

Rightsize runners for optimal price-performance

The resources that the runners consume can be right-sized to fit more runners per node or to have more capacity and fewer runners. This is controlled through YAML files so it’s straightforward to make the necessary changes.
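
As a rough illustration of that trade-off, the sketch below estimates how many runner pods fit on one node for a given resource request. The node size, the reserved capacity, and the two runner sizes are hypothetical numbers chosen for the example, not values from our deployment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    cpu_millicores: int
    memory_mib: int


def runners_per_node(node: Resources, runner: Resources, reserved: Resources) -> int:
    """Return how many runner pods fit on one node, limited by the scarcer resource."""
    usable_cpu = node.cpu_millicores - reserved.cpu_millicores
    usable_mem = node.memory_mib - reserved.memory_mib
    if usable_cpu <= 0 or usable_mem <= 0:
        return 0
    return min(usable_cpu // runner.cpu_millicores, usable_mem // runner.memory_mib)


# Hypothetical node: 8 vCPU / 32 GiB, with 500m CPU and 1 GiB held back for system pods.
node = Resources(cpu_millicores=8000, memory_mib=32768)
reserved = Resources(cpu_millicores=500, memory_mib=1024)

# Two hypothetical runner sizes: many small runners vs. a few large ones.
small_runner = Resources(cpu_millicores=1000, memory_mib=4096)
large_runner = Resources(cpu_millicores=3500, memory_mib=14336)

print("small runners per node:", runners_per_node(node, small_runner, reserved))
print("large runners per node:", runners_per_node(node, large_runner, reserved))
```

In practice the requests would live in the runner YAML, and a calculation like this only helps pick starting values before measuring real jobs.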

Enhance security with no need for direct internet access

The nodes of the Kubernetes cluster are deployed in a private subnet with no direct inbound internet access, so the runners are protected from external attacks. The runners can be reached through the GitHub API or the Kubernetes API, both of which have robust authentication mechanisms in place.

Enhance security with ephemeral runner

The solution uses ephemeral runners so that each job runs on a clean image. Instead of re-using runners to execute new jobs, the Controller launches new pods in Kubernetes for them. This improves the security posture of the solution, because a previous job cannot modify subsequent ones.

Optimize budgets

We observe that cost scales linearly with capacity. This makes spending predictable, so organizations that adopt the solution can forecast the cost of supporting their deployment.

How to Deploy the Solution

Now that we know the motivation behind this project and why we chose the option of the Actions Runner Controller, let’s go over the technical details of the implementation.

Setting up the Nodes

Note: We use Pulumi, an Infrastructure as Code framework, to deploy all the infrastructure for the solution.

- We first deploy a VPC with two private subnets and two public subnets. We need two private subnets for the NAT Gateway and we need two public subnets for the external-facing Load Balancer.

- Once the VPC is deployed, we provision an EKS cluster with an OIDC provider. This OIDC provider is what allows a Kubernetes service account to make requests to other AWS services from within the cluster.

- We then provision a Linux Managed NodeGroup with autoscaling. Managed NodeGroups are the preferred deployment pattern because AWS is responsible for AMI patching on those nodes and provides built-in auto-scaling. Because they are managed, the nodes also join the cluster automatically.

- Then, we provision an EC2 auto-scaling group for the Windows nodes, because Windows nodes can’t be provisioned using Managed NodeGroups. This also means that we are responsible for rotating these AMIs and for joining the nodes to the cluster.

- We join these Windows nodes to the cluster by creating an IAM role and attaching it as an Instance Profile to the nodes, then we modify the aws-auth ConfigMap in Kubernetes to let the cluster know that this IAM role has authority on the server.
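
To make the last step more concrete, here is a minimal sketch of what the added aws-auth entry could look like, built as a plain Python dictionary. The role ARN is a placeholder and the helper names are ours for illustration. The username template and groups follow the pattern that the Amazon EKS documentation describes for self-managed Windows nodes, so check them against the current documentation before relying on them.

```python
import json


def windows_node_role_mapping(role_arn: str) -> dict:
    """Build one mapRoles entry for nodes that use the given IAM role (hypothetical helper)."""
    return {
        "rolearn": role_arn,
        "username": "system:node:{{EC2PrivateDNSName}}",
        "groups": [
            "system:bootstrappers",
            "system:nodes",
            "eks:kube-proxy-windows",  # needed for Windows nodes
        ],
    }


def aws_auth_configmap(role_mappings: list) -> dict:
    """Wrap role mappings in an aws-auth ConfigMap manifest (hypothetical helper)."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": "aws-auth", "namespace": "kube-system"},
        # mapRoles is stored as a string; it is serialized as JSON here,
        # on the assumption that the YAML parser reading it accepts JSON.
        "data": {"mapRoles": json.dumps(role_mappings, indent=2)},
    }


# Placeholder ARN, not a real role.
mapping = windows_node_role_mapping(
    "arn:aws:iam::111122223333:role/example-windows-node-role"
)
configmap = aws_auth_configmap([mapping])
print(json.dumps(configmap, indent=2))
```

A real change has to preserve the entries that already exist in the ConfigMap (for example, the role used by the Linux Managed NodeGroup) rather than overwrite them.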

Both Managed Node Groups and the Autoscaling Groups can be dynamically autoscaled (based on different metrics) or autoscaled by schedule. Once both Groups of nodes are set up, we proceed to install the components within the cluster.

Installing Components

We use FluxCD, which is “a tool for keeping Kubernetes clusters in sync with sources of configuration (like Git repositories), and automating updates to configuration when there is new code to deploy.” Every resource deployed within the Kubernetes cluster has been integrated with FluxCD so that any changes are deployed automatically. This includes the Actions Runner Controller and its runner CRDs (RunnerDeployment, HorizontalRunnerAutoscaler), cert-manager, and AWS System Resources.

- To use FluxCD, we first bootstrap it to the cluster and the GitHub repository, which means that the cluster will have credentials to access files from the GitHub repository and deploy them.

- With this in place, we deploy the Actions Runner Controller and its dependencies.

- Once deployed, the Actions Runner Controller authenticates to GitHub using a GitHub App. It controls the self-hosted runners through Custom Resource Definitions (RunnerDeployments and HorizontalRunnerAutoscalers), allowing us to easily deploy the corresponding instances for each operating system. Both RunnerDeployments (Linux and Windows) use custom Docker images with pre-installed software. We provide these Dockerfiles to the community so that others can add the dependencies their own workflows need.

- Continuous integration (GitHub Actions) has been set up so that the images are built and pushed to the GitHub Container Registry and remain up-to-date with the GitHub repository.
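
For readers who have not seen a RunnerDeployment before, the sketch below builds one as a Python dictionary and prints it as JSON, which kubectl also accepts. The field names follow the actions.summerwind.dev/v1alpha1 API of the Actions Runner Controller as we understand it. The repository, image, labels, and resource values are placeholders, and the helper function is hypothetical rather than part of our repository.

```python
import json


def runner_deployment(name: str, repository: str, image: str, os_name: str,
                      labels: list, cpu: str, memory: str) -> dict:
    """Build a RunnerDeployment manifest for ephemeral runners (hypothetical helper)."""
    return {
        "apiVersion": "actions.summerwind.dev/v1alpha1",
        "kind": "RunnerDeployment",
        "metadata": {"name": name},
        "spec": {
            "template": {
                "spec": {
                    "repository": repository,
                    "image": image,        # custom image with pre-installed software
                    "ephemeral": True,     # each job gets a fresh runner pod
                    "labels": labels,      # used in a workflow's runs-on
                    # Schedule the pod onto nodes of the matching operating system.
                    "nodeSelector": {"kubernetes.io/os": os_name},
                    "resources": {
                        "requests": {"cpu": cpu, "memory": memory},
                        "limits": {"cpu": cpu, "memory": memory},
                    },
                }
            }
        },
    }


# Placeholder values for a Linux runner.
linux_runners = runner_deployment(
    name="example-linux-runners",
    repository="example-org/example-repo",
    image="ghcr.io/example-org/example-linux-runner:latest",
    os_name="linux",
    labels=["self-hosted-linux"],
    cpu="1",
    memory="4Gi",
)
print(json.dumps(linux_runners, indent=2))
```

A Windows RunnerDeployment would differ mainly in the image and the node selector. The replica count is left out on purpose, on the assumption that a HorizontalRunnerAutoscaler manages it.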

This solution provides many ways of controlling how these runners automatically scale. We’ve tested the policy where the pods scale based on the percentage of runners busy, which is completely configurable. Currently, if more than 75% of the runners on the deployment are busy, it automatically scales up. When 30% or less of the runners are busy, it scales down.
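
The decision rule described above can be sketched in a few lines. This is a simplified model for intuition, not the controller's actual implementation: the 75% and 30% thresholds come from our configuration, while the scale factors and the replica bounds are assumptions made up for the example.

```python
import math


def desired_replicas(current: int, busy: int, *,
                     scale_up_threshold: float = 0.75,
                     scale_down_threshold: float = 0.30,
                     scale_up_factor: float = 1.5,     # assumed value
                     scale_down_factor: float = 0.5,   # assumed value
                     min_replicas: int = 1,            # assumed value
                     max_replicas: int = 20) -> int:   # assumed value
    """Return the target runner count given how many runners are busy."""
    if current <= 0:
        # No runners exist, so there is no busy percentage to compute.
        return min_replicas
    busy_fraction = busy / current
    if busy_fraction > scale_up_threshold:
        target = math.ceil(current * scale_up_factor)
    elif busy_fraction <= scale_down_threshold:
        target = math.ceil(current * scale_down_factor)
    else:
        target = current  # between the thresholds: leave the deployment alone
    return max(min_replicas, min(max_replicas, target))


for current, busy in [(8, 7), (8, 4), (8, 2), (16, 16)]:
    print(f"{busy}/{current} busy -> {desired_replicas(current, busy)} runners")
```

The gap between the two thresholds acts as a dead band, so a deployment that hovers around 50% busy is not resized on every evaluation.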

Conclusion

Given the flexibility of the solution, many ASF projects, including Apache Airflow, are embracing it. The Apache Arrow project is adopting the solution to host both NVIDIA GPU and ARM runners, which are currently not available as GitHub-hosted runners. Because this solution scales down to zero, the project can save money on expensive runners. To check out our solution and give it a try, go to: https://github.com/voltrondata-labs/gha-controller-infra (currently under an Apache 2.0 License).